Instructor: Dennis Freeman

Description: In the next half of the course, periodic functions are represented as sums of harmonic functions, via Fourier decomposition. Linear time-invariant systems amplify and phase-shift these inputs to produce filtered output, an important new concept.

Lecture 14: Fourier Represe...

The following content is provided under a Creative Commons license. Your support will help MIT OpenCourseWare continue to offer high-quality educational resources for free. To make a donation, or view additional materials from hundreds of MIT courses, visit MIT OpenCourseWare at ocw.mit.edu.

DENNIS FREEMAN: Hello and welcome. Today, we're starting the second half of the class.

In the second half of the class, we'll focus on methods that are generally described as Fourier methods. Those methods revolve around thinking about signals as sums of sine waves. We've previously characterized a system by how it responds to sine waves, so this is closely related.

Now, instead of thinking about a system in terms of how it processes sine waves, we'll think about signals as being composed of sine waves. So that's the idea. And today, we'll do the very simplest kind of representation, which is a Fourier series.

That's important because it's going to be our first encounter with how you can think about complicated signals as sums of sines and cosines. We'll also get a new, generally useful representation for a system, called a filter. So those are the two agenda items for today. Roughly, the first half is on Fourier series representations for a signal. The second half is on thinking about systems as filters, which is a direct consequence of the first.

So the first question is just: why should we even think that you can describe signals in terms of sine waves? Well, there are very simple examples of that. Some signals are just very naturally described that way. One class of those is signals whose entire frequency content consists of harmonics.

Just a bit of jargon: we'll talk about harmonics of omega 0, harmonics of some fundamental frequency, say 1 kilohertz. The first harmonic is another name for the fundamental; that's 1 kilohertz in this example. The second harmonic is a component at twice the frequency. The third harmonic is at three times the frequency. And for no good reason at all, we'll call the 0-th harmonic the DC term, meaning Direct Current, which is neither direct nor has anything to do with current. But we will use that jargon because everybody else does. So that's just jargon.

What I want to think about at a deeper level, though, is what kinds of signals we should expect to have a good representation in terms of sums of sines and cosines. One very easy kind of signal to think about is the kind we associate with musical instruments.

So think about the sounds that these instruments generate. I'll play some audio, and while I'm playing it, what you're supposed to be doing is thinking about what cues make this sound like a sum of harmonics.

[PLAYING NOTES]

So using the things that we'll go over today in lecture, I can write a Python program, which I did, to analyze each of those sounds to expose its harmonic structure. And here's the results.

So k equals 0 is DC, k equals 1 is the fundamental, 2 is the second harmonic, then the third harmonic and the fourth harmonic. The lengths of the bars represent the magnitudes: how much energy (well, roughly energy), that is, how much signal is present at each harmonic frequency, as a function of harmonic number k, for each of the different instruments.
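The program used in lecture isn't shown. As a rough sketch of the idea, assuming we already know the fundamental period exactly and have samples covering exactly one period, the harmonic magnitudes can be estimated with a discretized form of the analysis integral derived later in this lecture. The function name `harmonic_magnitudes` is hypothetical, and the tone is synthetic, with made-up harmonic weights rather than data from the recordings.

```python
import cmath
import math


def harmonic_magnitudes(samples, num_harmonics):
    """Hypothetical helper: estimate |a_k| for k = 0..num_harmonics.

    Assumes `samples` spans exactly one period of the signal, and replaces
    a_k = (1/T) * integral over T of x(t) e^{-j k w0 t} dt by a Riemann sum.
    """
    n = len(samples)
    mags = []
    for k in range(num_harmonics + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        mags.append(abs(acc / n))
    return mags


# Synthetic "instrument": 220 Hz fundamental with made-up harmonic weights
# (weak fundamental, strong second harmonic). Not real recording data.
f0 = 220.0
period = 1.0 / f0
weights = {1: 0.2, 2: 1.0, 3: 0.5, 4: 0.1}
n = 256  # samples per period
samples = [
    sum(w * math.cos(2 * math.pi * k * f0 * (i * period / n)) for k, w in weights.items())
    for i in range(n)
]
mags = harmonic_magnitudes(samples, 5)
```

For a cosine of amplitude w at harmonic k, the two-sided coefficients are a_k = a_-k = w/2, which is why the estimates come out at half the weights. A real recording would also need its period estimated first, which this sketch skips.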

And the time waveforms have a signature too, a characteristic shape. In fact, if I go on, here is that characteristic shape. That's an actual waveform taken from the audio clip that I played.

That's a clip from the piano. This is a clip of the same time duration from the violin. A clip of the same time duration from the bassoon. And here are the harmonic structures for those different sounds. And you can see the harmonic structures look very different. So not much DC. A fair amount of fundamental, a lot of second. A lot of fundamental, not too much second. Not much fundamental. That's kind of interesting.

The bassoon is special in this class because there's not much fundamental, but there's a lot of second. So now, think about comparing these two and listen to them again.

[PLAYING NOTES]

So what I want you to try to think about-- and it's kind of hard. Think about how it is that the harmonic structure is playing a role in determining the timbre of the sound that you're hearing. OK. So that's kind of the motivation.

Another motivation is that thinking about signals in terms of their harmonic structure can provide important insights into the signals. For example, in music, the harmonic structure helps determine consonance and dissonance. So I'm going to play pairs of notes related by an octave shift, by a fifth, and by adjacent notes in the semitone scale. Below each pair, I've illustrated the harmonic structure for the D and for D prime. An octave above means that the first harmonic, the fundamental of the higher note, corresponds to the second harmonic of the lower note; its second harmonic corresponds to the lower note's fourth, et cetera.

There's a different relationship for the fifth, illustrated here, and yet another one for the adjacent semitones. So what I want you to do is hear the difference between those six sounds. And again, think about not only the time waveforms, but also how their harmonics relate.

[PLAYING NOTES]

So consonance has something to do with the overlap of the harmonic structures, and dissonance has something to do with their lack of overlap. The point is just that we can get some mileage out of thinking about signals in terms of their harmonic decomposition. So that's the introduction.
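To make the overlap idea concrete, here is a small sketch that lists the frequencies shared by the harmonic series of two notes. It assumes idealized just-intonation ratios (2:1 for the octave, 3:2 for the fifth, 16:15 for a semitone); equal-tempered instruments use slightly different ratios, so their harmonics nearly, rather than exactly, coincide. The helper name `shared_harmonics` is hypothetical.

```python
from fractions import Fraction


def shared_harmonics(ratio, max_multiple):
    """Hypothetical helper: frequencies (in units of the lower note's fundamental,
    up to max_multiple) present in the harmonic series of both the lower note
    and a note `ratio` times higher."""
    lower = {Fraction(m) for m in range(1, max_multiple + 1)}
    upper = set()
    k = 1
    while k * ratio <= max_multiple:
        upper.add(k * ratio)
        k += 1
    return sorted(lower & upper)


# Assumed just-intonation ratios for the three intervals played in lecture.
octave = shared_harmonics(Fraction(2, 1), 12)       # every harmonic of the upper note is shared
fifth = shared_harmonics(Fraction(3, 2), 12)        # every other harmonic of the upper note is shared
semitone = shared_harmonics(Fraction(16, 15), 12)   # nothing shared in this range
```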

How should we think about that? What kinds of signals ought to be representable by their harmonic structure?

OK. Well, if you just draw a picture in the time domain, what would the fundamental look like? What would the second harmonic look like? Well, that's a signal whose frequency is twice as big.

If the frequency is twice as big, the period is half as big. So there are now two periods of the blue guy in the same time interval as one period of the red guy. So the relationship among the harmonics' frequencies has a counterpart in time: the periods are inversely proportional to the frequencies. OK.

So think about the fundamental and the second, third, fourth, and fifth harmonics. Their periods are related in time: the k-th harmonic has a period of capital T over k. And one thing that should be clear is that all of these harmonics are periodic in capital T, the period of the fundamental. So by thinking about what harmonic signals look like in time, we see that periodic signals are our only candidates. It's going to be hard to generate a signal from a sum of harmonics that doesn't have that periodicity. So we should expect a relationship between signals that can be represented by a sum of harmonics and periodic signals.
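A quick numerical check of the periodicity argument, assuming an arbitrary fundamental period of 0.5 s: every harmonic repeats after one fundamental period T, even though the k-th harmonic's own period is T/k.

```python
import cmath
import math

T = 0.5                  # assumed fundamental period, seconds
w0 = 2 * math.pi / T     # fundamental frequency, rad/s


def harmonic(k, t):
    """The k-th harmonic, e^{j k w0 t}."""
    return cmath.exp(1j * k * w0 * t)


# Every harmonic k takes the same value one fundamental period later...
max_err = max(
    abs(harmonic(k, t + T) - harmonic(k, t))
    for k in range(-5, 6)
    for t in (0.0, 0.013, 0.21, 0.37)
)
# ...even though the k-th harmonic's own period, T/k, gets shorter as k grows.
periods = {k: T / k for k in range(1, 6)}
```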

So we would not expect an aperiodic signal to be represented this way. The counter question is, should we be expecting that all periodic signals can be represented this way, or some periodic signals, or some very special class? Is it a big class or a small class? How do we think about that? And historically, that's been a hard question. And by the end of the hour, I hope that you all understand why it was a hard question.

Some people, Fourier among them, advocated the notion that you can make a meaningful harmonic representation for a very wide class of signals. Fourier claimed, basically, everything: anything that was periodic could be represented as a sum of harmonics.

Other people thought that was preposterous. In a widely reported public rebuke of Fourier, Lagrange said, this is ridiculous, because every harmonic is a continuous function of time. And you can easily manufacture a periodic signal that's a discontinuous function of time.

Take, for example, a square wave. As Lagrange put it, how on earth would you represent a discontinuous function by a sum of continuous functions? That makes no sense.

So what we will want to do in the course of the hour is think about, does that or doesn't that make sense? And it will turn out the answer is kind of complicated. And so we can sort of forgive both sides for having sort of argued with each other because the answer is not completely obvious.

For the time being, I'm going to just assume that the answer is yes, that it makes sense to try to make a harmonic representation of a signal that might even be discontinuous. I'm going to assume the signal is periodic, and let's try. The question will be whether that makes any sense.

So there's a little bit of math. There's a mathematical foundation that makes understanding these Fourier series very easy. The idea has two parts.

First, consider a complex exponential at frequency k omega 0, the k-th harmonic. If you multiply the k-th harmonic by the l-th harmonic, that is, a signal of frequency k omega 0 times a signal of frequency l omega 0, you get another harmonic, the (k plus l)-th one. That follows from a simple property of exponentials: when you take the product of two exponentials, you add the exponents. OK.
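A numerical check of the product rule, with T = 1 and the arbitrary choice k = 3, l = -5: the product of the two harmonics matches the (k + l)-th harmonic at every sampled time.

```python
import cmath
import math

w0 = 2 * math.pi  # assumed fundamental frequency (T = 1)


def harmonic(k, t):
    """The k-th harmonic, e^{j k w0 t}."""
    return cmath.exp(1j * k * w0 * t)


k, l = 3, -5
times = [i / 100 for i in range(100)]
# Largest mismatch between (k-th harmonic) * (l-th harmonic) and the (k+l)-th harmonic.
worst = max(abs(harmonic(k, t) * harmonic(l, t) - harmonic(k + l, t)) for t in times)
```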

So that makes it very easy to think about harmonic structure, because there's a simple relationship for generating one harmonic signal in time from others. The second thing that makes harmonics easy to think about mathematically is integration over a period. We'll do this so often that we'll abbreviate it by writing the integral with a subscript T, because if a signal is periodic, it really doesn't matter which period you choose to integrate over.

Suppose you had a signal that was periodic in, say, capital T, and you integrated it between 0 and T. Because it's periodic, you can shift that window anywhere you like and get the same answer. So we won't worry about whether the integral goes from 0 to capital T or from 1 to capital T plus 1. We'll just say integrate over the period T.

If you integrate any harmonic signal over the period T, there are only two possible answers. If you integrate e to the jk omega 0 t over one period of the fundamental, there will be k complete periods of the harmonic in that interval. So if k is any integer other than 0, you're integrating over complete periods of a sinusoid, and the integral, equivalently the average value over that period, is 0.

The only time you'll ever get something non-zero is if k is 0. If k is 0, the DC term, then e to the 0 is 1, and the integral of 1 over a period, regardless of which period you pick, is capital T. So those are the two things.
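The integral-over-a-period property can be checked with a simple Riemann sum; this is a sketch assuming T = 2 and 1000 sample points. For |k| smaller than the number of samples, the samples of e^{jk omega_0 t} are evenly spaced points on the unit circle that sum to zero, so the rectangle rule reproduces the exact answer up to rounding.

```python
import cmath
import math


def integrate_harmonic(k, T, n=1000):
    """Rectangle-rule approximation of the integral of e^{j k (2 pi / T) t} over [0, T)."""
    dt = T / n
    w0 = 2 * math.pi / T
    return sum(cmath.exp(1j * k * w0 * i * dt) for i in range(n)) * dt


T = 2.0
results = {k: integrate_harmonic(k, T) for k in range(-3, 4)}
# results[0] is T; every other entry is (numerically) 0.
```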

It's easy to figure out the product of two harmonic signals: it's just another harmonic. And integrals over a period are easy.

With that basis, let's imagine some arbitrary periodic signal x of t. If x of t is periodic in capital T, then x of t equals x of t plus capital T. Let's assume that it can be written as a sum of harmonics.

So x of t is a sum, from k equals minus infinity to infinity, of some amount a sub k of e to the jk omega 0 t. Why do I have the minus k's in there? OK. I've asked this question about six times. Why do I have the minus k's in there? Yeah.

AUDIENCE: [INAUDIBLE]

DENNIS FREEMAN: Precisely. We like Euler's equation. We like complex numbers. We don't like cosines and sines. We can convert sines and cosines into complex exponentials using Euler's formula, and then everything's very easy. So we use minus k for the same reason that we used minus omega in previous representations.

So imagine that we can write x of t, some generic periodic signal, as a sum of harmonics. Then let's use the principles that we talked about before. If x of t can be represented as a sum, I can sift out the term of interest by multiplying x of t by e to the minus jl omega 0 t. We'll see in a minute what I mean by "sift out" and why I'm using minus instead of plus.

Now run this integral over a period, and imagine for the moment that all the sums are well-behaved, so nothing explodes and all the convergence issues are trivial.

If the convergence issues were trivial, then I could swap the sum and the integral.

If I did that, then I would end up with a sum over a bunch of different k's. For each one, I'd be integrating the k-th harmonic times the minus l-th harmonic, which is the (k minus l)-th harmonic. That's going to give me 0 when I integrate over a period, except when k equals l.

If k equals l, then I'm going to get capital T. So the net effect of doing this is to generate T times a sub l: it sifts out one of those coefficients. Well, that's nice. That means that if I assume I can write the signal this way, then I can sift out the k-th coefficient by multiplying by the minus k-th harmonic, integrating over a period, and dividing by T. I divide by T because integrating 1 over a period gives capital T.

And that tells me that, if this were true, this is how you'd find the coefficients. That leads to what we call a pair of analysis and synthesis equations. Suppose x can be represented this way, that is, we can synthesize a periodic signal x by adding together a bunch of harmonic components with suitable weighting factors. The weighting factors a sub k generally have different magnitudes for different k, just like the musical notes that I played at the beginning: all of the different k's had different amplitudes. They also have different phases, so a sub k can be a complex number. We like complex numbers. Complex numbers are easy.
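Written out in the notation of the spoken derivation (period T, fundamental omega_0 = 2 pi / T), the synthesis equation, the sifting step, and the resulting analysis equation are as follows. Swapping the sum and the integral in the middle line assumes, as above, that the convergence issues are well behaved.

```latex
\begin{aligned}
x(t) &= x(t+T) = \sum_{k=-\infty}^{\infty} a_k\, e^{jk\omega_0 t},
  \qquad \omega_0 = \frac{2\pi}{T} && \text{(synthesis)} \\
\int_T x(t)\, e^{-jl\omega_0 t}\, dt
  &= \sum_{k=-\infty}^{\infty} a_k \int_T e^{j(k-l)\omega_0 t}\, dt = T\, a_l && \text{(sifting)} \\
a_k &= \frac{1}{T} \int_T x(t)\, e^{-jk\omega_0 t}\, dt && \text{(analysis)}
\end{aligned}
```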

So if we can represent x by a sum of harmonics weighted by complex numbers, then we can sift out what those weights were by running the analysis equation. We can analyze the signal to figure out how much of the k-th component is there.
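A minimal round-trip sketch of the two equations, assuming a made-up real signal built from a few complex-weighted harmonics: synthesize it, sample one period, and run a discretized analysis equation to recover the weights. With enough samples per period relative to the highest harmonic, the sum recovers the coefficients essentially exactly.

```python
import cmath
import math

N = 64  # assumed number of samples per period


def analysis(samples, k):
    """a_k ~= (1/T) * integral over T of x(t) e^{-j k w0 t} dt, as a Riemann sum over one period."""
    n = len(samples)
    return sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples)) / n


def synthesis(coeffs, t_over_T):
    """x(t) = sum_k a_k e^{j k w0 t}, with time given as a fraction of the period."""
    return sum(a * cmath.exp(2j * math.pi * k * t_over_T) for k, a in coeffs.items())


# Made-up complex weights (magnitude and phase); a_-k is the conjugate of a_k, so x(t) is real.
true_coeffs = {0: 0.5, 1: 1 - 1j, -1: 1 + 1j, 3: 0.25j, -3: -0.25j}
samples = [synthesis(true_coeffs, i / N) for i in range(N)]
est = {k: analysis(samples, k) for k in range(-4, 5)}
```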

So the strategy, then, is to assume that all works. Let's take an ugly signal of the type that caused controversy back in the early 1800s, like a square wave, assume it all works, and figure out what the a sub k's would have had to be. Then we'll figure out whether that made any sense.

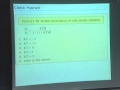

So if this thing were to be represented by a Fourier series, by a sum of harmonic components, how many of these statements would be true about those harmonic amplitudes? So as you start to write, look toward your neighbor and say hi. Then, write and figure out the answer.

[SIDE CONVERSATIONS]

OK. So how many of those statements are true? Raise your hand with that number of fingers; between 0 and 5 would seem like good limits. How many statements are true? OK, we've got about 40% correct. So that's a little under half.

What do I do first? If I want to figure this out, what would I do to try to figure out-- so statement 1, a sub k is 0 if k is even. Should that be true or false? How do I think about that? Yeah.

AUDIENCE: The square wave [INAUDIBLE].

DENNIS FREEMAN: The square wave's odd. OK. That sounds good. So how does that help me?

AUDIENCE: If there was an even term in the Fourier series, then that would mess up the oddness [INAUDIBLE].

DENNIS FREEMAN: So I might want to have only odd terms. How many terms in the Fourier decomposition are odd and even? What is the Fourier decomposition? So let's start by just writing some definition, right?

So I want to say that x of t is-- let's see. I have a period of 1. That's the same as x of t plus 1, right? And I want to write this as some sort of a Fourier thing. So what do I write next?

I want to say that this signal can be represented by some sum of something. And I'm going to have to put some weights in. And I'm going to want something like e to the minus j 2 pi kt by T, something like that. And I'm going to need a whole bunch of these. Where does even and odd show up?

If I were to rewrite this in terms of some sines and some cosines, then the decomposition into even and odd would be easier. That's correct. So let's get back to this one. How do I think about this one, though?

So I want k to be even. What does k even correspond to? OK. Let's assume I don't know what k even corresponds to. How do I do this if I don't know anything? How do I just grunt through it? Yeah.

AUDIENCE: Just do the integral.

DENNIS FREEMAN: Just do the integral, exactly. Assuming everything works, a sub k should be 1 over capital T times the integral over a period T of x of t times e to the minus j 2 pi kt over T. I should have put a plus in the synthesis sum a moment ago: I want the minus sign in one of the formulas, the analysis equation, and not in the other one. OK.

And if you just think about that integral, it's not very hard. Capital T is 1. So I get two pieces, the piece that corresponds to minus 1/2 and the piece that corresponds to plus 1/2. And when I integrate them, I get something that's a little bit-- well, no. It's pretty straightforward, right?

So you integrate this thing. The minus j 2 pi k from the exponent ends up in the denominator, and the factor of 1/2 turns it into j 4 pi k. You get four pieces. Two of those pieces are constants of size 1, and together they give 2. The other two are exponentials, e to the plus and minus j pi k.

The point is that it's a pretty simple transformation from the square wave to a sequence of amplitudes. If you choose k to be even, then those exponents are integer multiples of j 2 pi, so both exponentials equal 1, and together they cancel the two constant pieces. That kills this stuff, and a sub k is 0. If k is odd, the exponentials equal minus 1 instead, and the pieces add rather than cancel. So that's where the k even and k odd distinction comes from.
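For reference, here is the integral worked out in full. This is a sketch assuming the square wave has period T = 1 and equals +1/2 on (0, 1/2) and -1/2 on (-1/2, 0); that amplitude is an assumption, chosen because it reproduces the result a_k = 1/(j pi k) quoted in the lecture. The DC coefficient a_0 is 0 because the wave averages to zero.

```latex
\begin{aligned}
a_k &= \int_{-1/2}^{0} \left(-\tfrac{1}{2}\right) e^{-j2\pi k t}\,dt
     + \int_{0}^{1/2} \tfrac{1}{2}\, e^{-j2\pi k t}\,dt \\
    &= -\frac{1}{2}\,\frac{1 - e^{j\pi k}}{-j2\pi k}
     + \frac{1}{2}\,\frac{e^{-j\pi k} - 1}{-j2\pi k}
     = \frac{-2 + e^{j\pi k} + e^{-j\pi k}}{-j4\pi k} \\
    &= \frac{1 - (-1)^k}{j2\pi k}
     = \begin{cases} \dfrac{1}{j\pi k}, & k \text{ odd} \\[4pt] 0, & k \text{ even},\ k \neq 0 \end{cases}
\end{aligned}
```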

So the point is that there's a simple way to just crank through it, and we get a simple representation: a sub k is 1 over j pi k for k odd, and 0 otherwise. And then we can just run down through the list. We'll see in a minute why this particular list of properties is interesting.
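The closed-form result can be cross-checked numerically. This sketch assumes a square wave with period 1 that is +1/2 on the first half-period and -1/2 on the second (the amplitude that gives a_k = 1/(j pi k)), and approximates the analysis integral with the midpoint rule.

```python
import cmath
import math


def square_wave(t):
    """Assumed square wave: period 1, +1/2 on [0, 1/2), -1/2 on [1/2, 1)."""
    return 0.5 if (t % 1.0) < 0.5 else -0.5


def fourier_coeff(x, k, T=1.0, n=4000):
    """Midpoint-rule approximation of a_k = (1/T) * integral over T of x(t) e^{-j 2 pi k t / T} dt."""
    dt = T / n
    total = 0j
    for i in range(n):
        t = (i + 0.5) * dt
        total += x(t) * cmath.exp(-2j * math.pi * k * t / T)
    return total * dt / T


numeric = {k: fourier_coeff(square_wave, k) for k in range(0, 8)}
closed_form = {k: (1 / (1j * math.pi * k) if k % 2 else 0) for k in range(0, 8)}
```

The numbers also illustrate the check-yourself answers: even-k coefficients vanish, the odd ones are purely imaginary, and their magnitudes fall off as 1/k.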

So a sub k is 0 if k is even? Yep.

Is a sub k real? Nope, there's a j in it. Does the magnitude of a sub k decrease as 1 over k squared? Nope. It decreases as 1 over k.

Is there an infinite number of non-zero terms? Yup. They continue as k goes to infinity.

All of the above? Obviously not. The point is it's simple to do and you end up representing the time waveform by just a sequence of numbers.

How big is the fundamental? How big is the second harmonic? How big is the third harmonic, et cetera. There's some more math that makes things even easier.

There are ways of thinking about operations in time as equivalent operations in harmonics. That makes the idea even more powerful because what we are developing is an alternative representation for signals. The same as we had multiple representations for systems-- differential equations, block diagrams, h of t, h of s, h of r, Bode plots, all those different representations of systems, this represents an alternative representation of a signal. We think about it being represented in time or we think about it being represented in harmonics. Or eventually, we'll call that frequency. So we'll think about signals being represented in time or frequency.

And you can think about operations in time having corresponding operations in frequency. As illustrated here, if a signal is differentiated in time, its Fourier coefficients are multiplied by j k times 2 pi over T. OK, well that's easy.

If we assume for the moment that we can represent x as this kind of a sum, then, since differentiation is linear, the derivative of the sum is the sum of the derivatives. The derivative of each complex exponential is easy. So if you knew the a sub k's, you could easily write the series for the derivative by just multiplying each a sub k by j k times 2 pi over T, the factor that comes down from the exponent. OK. So that gives us a way of thinking about operations on signals in time as operations in frequency. So here's an illustration of that.
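To check the differentiation property without assuming it, this sketch (with a made-up band-limited signal and T = 2) differentiates the signal numerically by central differences, runs the analysis sum on the result, and compares against j k (2 pi / T) a_k.

```python
import cmath
import math

T = 2.0                      # assumed period
w0 = 2 * math.pi / T
# Made-up coefficients of a real periodic signal (a_-k is the conjugate of a_k).
coeffs = {1: 0.5 - 0.25j, -1: 0.5 + 0.25j, 2: 0.1j, -2: -0.1j}


def x(t):
    """Synthesis: x(t) = sum_k a_k e^{j k w0 t}."""
    return sum(a * cmath.exp(1j * k * w0 * t) for k, a in coeffs.items())


def dxdt_numeric(t, h=1e-5):
    """Central-difference estimate of dx/dt (does not use the Fourier formula)."""
    return (x(t + h) - x(t - h)) / (2 * h)


def coeff(f, k, n=256):
    """Analysis: a_k ~= (1/T) * Riemann sum of f(t) e^{-j k w0 t} over one period."""
    dt = T / n
    return sum(f(i * dt) * cmath.exp(-1j * k * w0 * i * dt) for i in range(n)) * dt / T


measured = {k: coeff(dxdt_numeric, k) for k in range(-3, 4)}
predicted = {k: 1j * k * w0 * coeffs.get(k, 0) for k in range(-3, 4)}
```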

Imagine that we have a triangle wave rather than a square wave. Answer the same questions. And be aware that it's a trick. By "trick," I mean you could use the obvious approach, which is the one we just did. Or you could use some insight from the last slide and do it in one step.

So how many of these statements are true? Everybody, raise your hand. Raise the number of fingers equal to the number of correct statements.

We've got a complete switch of who's right. And still, 40% correct. That's phenomenal. So we could just go through the list.

What's the trick?

AUDIENCE: [INAUDIBLE]

DENNIS FREEMAN: So close. So integral, exactly. Right. This signal is the integral of the previous signal. So that means that we ought to be able-- there's some kind of a simple relationship between this series and the series that represents that one. What should I do to this one to make it look like that one?

AUDIENCE: [INAUDIBLE]

DENNIS FREEMAN: Divide by j 2 pi k, precisely. So I can think of the b sub k's as the a sub k's, modified by the frequency-domain version of differentiation or, here, integration. In the frequency domain, integration corresponds to dividing by j 2 pi k (with capital T equal to 1), for k not equal to 0; the DC term is set separately by the signal's average value.
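Here is a numerical sketch of the shortcut. It assumes the triangle wave is the running integral of a square wave that is +1/2 on the first half-period and -1/2 on the second (period 1, coefficients 1/(j pi k) for odd k), shifted to have zero mean; the lecture's figure isn't reproduced here, so that offset is an assumption. Its directly computed coefficients should match a_k / (j 2 pi k) for k not equal to 0.

```python
import cmath
import math


def triangle_wave(t):
    """Assumed zero-mean triangle wave, period 1: running integral of the
    plus/minus 1/2 square wave, minus its mean of 1/8."""
    t = t % 1.0
    ramp = t / 2 if t < 0.5 else (1 - t) / 2
    return ramp - 1 / 8


def fourier_coeff(x, k, n=4000):
    """Midpoint-rule approximation of a_k = integral over [0, 1) of x(t) e^{-j 2 pi k t} dt (T = 1)."""
    dt = 1.0 / n
    return sum(x((i + 0.5) * dt) * cmath.exp(-2j * math.pi * k * (i + 0.5) * dt)
               for i in range(n)) * dt


def square_coeff(k):
    """Square-wave coefficients: 1/(j pi k) for odd k, 0 otherwise."""
    return 1 / (1j * math.pi * k) if k % 2 else 0


# Integration in time <-> divide by j 2 pi k in frequency (k != 0); the DC term is the mean, 0 here.
predicted = {k: square_coeff(k) / (2j * math.pi * k) for k in range(1, 8)}
predicted[0] = 0
measured = {k: fourier_coeff(triangle_wave, k) for k in range(0, 8)}
```

The predicted values, -1/(2 pi^2 k^2) for odd k, are real and fall off as 1/k^2, in line with the answers to the check-yourself questions.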

So now, if I think about the list of questions: is b sub k equal to 0 for k even? Yeah, it was before, and it is now, because all we're doing is changing the weights. If a weight started out 0 and we're multiplying it by something, we can't get it to be different from 0.

Is it real-valued? Well, now it is, because I have j times j, which is minus 1. So now the coefficients are real-valued.

Does it decrease as 1 over k squared? Now it does, because I get one factor of k from the square wave's coefficients and the other factor of k from dividing by j 2 pi k.

An infinite number of non-zero terms? Of course; the old one had that, too.

All of the above? Yes. OK. So that was just supposed to be a simple exercise in how to run the formulas, how to do the stuff from a purely mechanical point of view.

Now I want to think about the bigger question: does any of this make any sense? The check-yourself questions were concocted to help me make sense of that, so that if we think about the answers that came out, we will have some insight into why we think it works and why it was controversial some 200 years ago. So let's do the simple case first.

Even Lagrange wouldn't have any trouble with this-- well, maybe he would have had a little trouble. I guess he would have had a little trouble.

So Lagrange had the problem with Fourier that Fourier was making these outrageous claims that any periodic function should have a Fourier decomposition. And if you have a discontinuous function, and if all the basis functions are continuous, how could that make any sense? This doesn't have any discontinuities, but it does have slope discontinuities. That's sort of the same thing.

If you have a slope discontinuity and you're trying to make-- you're trying to represent the signal by a sum of signals, none of whom have slope discontinuities, that's kind of the same kind of problem.

One way we can think about it is to think about how the approximation gets better, or fails to get better, as we add terms. So this is the base function. This is the signal that we would like to represent harmonically.

If we just think about the first non-zero term, the first non-zero term is the fundamental. It actually is not bad.

If we were willing to accept errors about this big, it would be OK. But somehow, it doesn't capture much triangliness. It sort of doesn't look much [INAUDIBLE]. So the one term sort of catches a lot of the deviation, but it doesn't capture the triangle aspect.

If you add another term-- the next non-zero term is the third one. So if you write the sum of the first term, the k equals 1 term and the k equals 3 term, you get this waveform. Notice that the effect of that was to creep it toward the underlying signal.

If you add another term-- 5, 7, 9-- it's getting closer, closer, closer. 19 because I got bored making the figures, so I skipped a bunch. 29, because I skipped a bunch. 39. You can see that what's happening is as we add more and more of those terms, by the time we got up to number 39, it's pretty good. So that probably from your seat, it's not altogether apparent that they're different. They're still different. But you can see that there is a sense in which the approximation is converging toward the underlying signal. That's good. So that's a sense in which there is a convergence between the signal and its Fourier representation.
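The convergence for the triangle wave can be quantified with a sketch like this. It assumes a zero-mean triangle wave with period 1 whose coefficients are b_k = -1/(2 pi^2 k^2) for odd k (consistent with dividing the square-wave coefficients 1/(j pi k) by j 2 pi k), and computes the worst-case error of each partial sum on a fine grid. The error shrinks steadily as terms are added, roughly like 1/N.

```python
import math


def triangle_wave(t):
    """Assumed zero-mean triangle wave (period 1): -1/8 at t = 0, +1/8 at t = 1/2."""
    t = t % 1.0
    return (t / 2 if t < 0.5 else (1 - t) / 2) - 1 / 8


def triangle_partial_sum(t, n_max):
    """Harmonics |k| <= n_max, using b_k = b_-k = -1/(2 pi^2 k^2) for odd k,
    so each +/-k pair contributes -cos(2 pi k t) / (pi^2 k^2)."""
    return sum(-math.cos(2 * math.pi * k * t) / (math.pi ** 2 * k ** 2)
               for k in range(1, n_max + 1, 2))


grid = [i / 1000 for i in range(1000)]
orders = (1, 3, 5, 9, 19, 39)
max_error = {n: max(abs(triangle_partial_sum(t, n) - triangle_wave(t)) for t in grid)
             for n in orders}
```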

A different kind of thing happens here. Now, let's do the square wave.

If we put in the first term of the Fourier representation, which is the fundamental, we get a sine wave approximation which is a little worse perhaps for a square wave than it was for a triangle wave. Put in the 3rd, 5th, 7th, 9th, 19th-- so I threw in 5 again-- 29, 39. It's not doing as well.

What's not doing as well? So we go one-term partial sum-- 2, 3, 4, 5, 20. 10, 15, 20. Yeah.

AUDIENCE: It's not able to handle the really quick transitions.

DENNIS FREEMAN: It's not able to handle the really quick transition. It's kind of like Lagrange said, right? How are you going to make a discontinuous function out of a sum of continuous ones? It's hard.

In fact, this is called the Gibbs phenomenon, after the guy who sort of formulated the mathematics of this. Even in the limit, no matter how many terms you put into the sum, the worst-case overshoot is about 9% of the size of the jump. You can't get rid of it. So in some sense, Lagrange is right. You can't put enough terms in there to make the worst-case error go to 0.
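A numerical sketch of the Gibbs phenomenon, assuming a square wave that switches between -1/2 and +1/2 (so a_k = 1/(j pi k) for odd k and the jump has size 1): search just to the right of the jump for the peak of each partial sum. The overshoot stays near 9% of the jump while the peak moves closer to the jump.

```python
import math


def square_partial_sum(t, n_max):
    """Partial Fourier sum of the assumed square wave (+1/2 on (0, 1/2), -1/2 on (1/2, 1), T = 1).
    Pairing a_k = 1/(j pi k) with a_-k gives 2 sin(2 pi k t) / (pi k) for each odd k <= n_max."""
    return sum(2 * math.sin(2 * math.pi * k * t) / (math.pi * k)
               for k in range(1, n_max + 1, 2))


def peak_after_jump(n_max, points=2000):
    """Grid search just to the right of the jump at t = 0 for the partial sum's maximum."""
    t_hi = 2.0 / (n_max + 1)   # the first peak sits near t = 1 / (2 (n_max + 1))
    best_t, best_v = 0.0, -math.inf
    for i in range(1, points + 1):
        t = t_hi * i / points
        v = square_partial_sum(t, n_max)
        if v > best_v:
            best_t, best_v = t, v
    return best_t, best_v


peaks = {n: peak_after_jump(n) for n in (9, 49, 199)}
# Overshoot above +1/2, as a fraction of the jump size (the jump from -1/2 to +1/2 is 1).
overshoot = {n: v - 0.5 for n, (t, v) in peaks.items()}
# Where the peak sits: it moves toward the jump as terms are added.
peak_location = {n: t for n, (t, v) in peaks.items()}
```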

So the problem then becomes that we can't really think about convergence in the familiar ways we like to. Measuring convergence by the worst deviation at any point is not a good way to think about it, because for every partial sum there's always a point near the jump that deviates a lot.

And in fact, in signal processing we have lots of other ways to think about convergence. So in addition to the 9% overshoot of the Gibbs phenomenon, what else do you see as the trend as you increase the number of terms in the partial sum? In addition to the overshoot staying roughly the same height, what else happened as I added terms to the partial sum? It got skinnier.

The deviations got crammed toward the transition point. And if I keep adding them, they'll get crammed more and more in there. And it's not like an impulse.

The height didn't get bigger. The width got smaller. Had the height gotten bigger, we'd have been in trouble, because then we would have said it deviates from the square wave by having an impulse at the transition. It doesn't have an impulse.

The height's staying the same. The width is shrinking toward 0. So the energy in the difference between the partial sum and the square wave is getting smaller and smaller. A different way to think about convergence, then, is: how big is the energy of the difference between the two signals?

In that sense, this converges and Fourier's right. As you add terms, the energy difference between the two signals goes to 0. That's the modern way of thinking about the controversy. There is an issue.
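The energy view can be checked the same way. This sketch, again assuming the square wave that switches between -1/2 and +1/2 with period 1, computes the mean-square difference between the square wave and each partial sum, and compares it with the energy in the omitted harmonics, the sum of |a_k|^2 over |k| > N. That comparison uses Parseval's relation, which isn't stated in the lecture but follows from the orthogonality of the harmonics.

```python
import math


def square_wave(t):
    """Assumed square wave: period 1, +1/2 on [0, 1/2), -1/2 on [1/2, 1)."""
    return 0.5 if (t % 1.0) < 0.5 else -0.5


def square_partial_sum(t, n_max):
    """Sum of the square wave's harmonics with |k| <= n_max (only odd k are non-zero)."""
    return sum(2 * math.sin(2 * math.pi * k * t) / (math.pi * k)
               for k in range(1, n_max + 1, 2))


def error_energy(n_max, n=4000):
    """(1/T) * integral over one period of (x(t) - partial sum)^2, by the midpoint rule."""
    dt = 1.0 / n
    total = 0.0
    for i in range(n):
        t = (i + 0.5) * dt
        total += (square_wave(t) - square_partial_sum(t, n_max)) ** 2
    return total * dt


def parseval_tail(n_max, k_stop=200001):
    """Energy in the omitted harmonics: |a_k|^2 = 1/(pi k)^2, summed over both signs of odd k > n_max."""
    return sum(2 / (math.pi * k) ** 2 for k in range(1, k_stop, 2) if k > n_max)


orders = (1, 9, 49)
measured = {n: error_energy(n) for n in orders}
predicted = {n: parseval_tail(n) for n in orders}
```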

If we choose to think about convergence in terms of energy difference, then we can resolve the problem. That's what we'll do.

That's the reason that, in lots of the things we talk about, we haven't been too worried about single points being off of curves. That's come up in some of the homeworks. Say you have a function that is 1 everywhere except at one point. There's no energy difference between the signal that differs at that one point and the original: the difference has finite height but 0 width. So that's the reason we haven't been so worried about that. We use this kind of definition of equality: two signals are equal if there's no energy difference between them.

And using that kind of a way of thinking about things, we get to write Fourier decompositions, harmonic decompositions, for a wide range of signals. Not all of them, right? Fourier exaggerated. But for a wide range of signals.

In fact, the condition we will typically use is finite energy: for signals with finite energy over a period, we'll be able to do this. So now, in the last part of this lecture, I want to introduce how thinking about a signal in terms of its harmonic structure lets us think about systems differently.

So here, the idea is we previously thought about systems as frequency responses. In a frequency response, we use the eigenfunction property. You put in an eigenfunction, one of these complex exponentials. You get out the same complex exponential if the system is linear time invariant. And the eigenvalue is the value of the system function evaluated at the frequency of interest. We've done that before.

The neat thing that happens if you think about Fourier series is that you can break down the input into a sum of discrete parts: the 0-th harmonic, the first harmonic, the second harmonic, the third harmonic. And each one of those parts is an eigenfunction. Each one gets selectively amplified and/or delayed according to the system's frequency response. That lets us think about the effect of a system as filtering the input.

The idea of a filter rests on the fact that LTI systems, Linear Time-Invariant systems, the same kind of systems that we've been thinking about all along, cannot create new frequencies.

If you put some frequency in, that's the frequency that comes out. We've already seen that when we talk about frequency response.

They can only scale the magnitude and shift the phase. They can't make new frequencies; all they can do is change the amplitude and the phase of the frequencies that are already there. And that's a very interesting way to think about a system.
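To see that an LTI system only reweights existing frequencies, this sketch simulates an RC low-pass filter (the example discussed next in the lecture; the time constant of 1 s is an assumption) driven by a fundamental plus a third harmonic, using a fourth-order Runge-Kutta integrator. After the start-up transient dies out, the output over one period contains only harmonics 1 and 3, each multiplied by H(j k omega_0) = 1/(1 + j k omega_0 RC).

```python
import cmath
import math

TAU = 1.0                  # assumed RC time constant, seconds
W0 = 1.0                   # assumed fundamental frequency, rad/s
T = 2 * math.pi / W0       # fundamental period


def x(t):
    """Input: fundamental plus a third harmonic (made-up amplitudes)."""
    return math.cos(W0 * t) + 0.5 * math.cos(3 * W0 * t)


def dydt(t, y):
    """RC low-pass dynamics: tau * dy/dt = x(t) - y(t)."""
    return (x(t) - y) / TAU


def rk4_step(t, y, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = dydt(t, y)
    k2 = dydt(t + dt / 2, y + dt / 2 * k1)
    k3 = dydt(t + dt / 2, y + dt / 2 * k2)
    k4 = dydt(t + dt, y + dt * k3)
    return y + dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4)


n = 2000                   # steps (and samples) per period
dt = T / n
y = 0.0
for i in range(5 * n):     # let the start-up transient die out (about 31 time constants)
    y = rk4_step(i * dt, y, dt)
samples = []
for i in range(5 * n, 6 * n):   # record one steady-state period
    samples.append(y)
    y = rk4_step(i * dt, y, dt)


def coeff(k):
    """Analysis sum over the recorded period: c_k ~= (1/n) sum_i y_i e^{-j 2 pi k i / n}."""
    return sum(s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(samples)) / n


input_coeffs = {1: 0.5, -1: 0.5, 3: 0.25, -3: 0.25}
measured = {k: coeff(k) for k in range(-5, 6)}
predicted = {k: input_coeffs.get(k, 0) / (1 + 1j * k * W0 * TAU) for k in range(-5, 6)}
```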

Think about a low-pass filter. Think about a system whose input is little vi and whose output is little vo. You all know how to characterize that a gazillion ways. You would know how to write a differential equation for that. Nod your head yes. Yes, yes, yes? You would know how to write that as a differential equation. You'd be able to write a system function. You'd be able to do a frequency response, a Bode plot, all those sorts of things. That's fine.

What I want to do is think about it instead as a filter. So imagine the Bode plot. The Bode plot is good, right? So imagine the Bode plot representation for the RC low-pass filter. The RC low-pass filter has a single pole, at s equals minus 1 over RC, so its break frequency is 1 over RC. The Bode plot looks like so. The magnitude has a constant asymptote at low frequencies. At high frequencies, it slopes down with a slope of minus 1 on log-log axes, or, as we would say, minus 20 dB per decade. And it has some sort of phase response. At low frequencies, the phase is 0. At high frequencies, the phase lags by pi over 2.
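The Bode-plot features described here can be checked numerically from H(j omega) = 1/(1 + j omega RC), assuming RC = 1 ms for concreteness.

```python
import cmath
import math

RC = 1e-3  # assumed time constant (seconds), so the break frequency is 1/RC = 1000 rad/s


def H(w):
    """Frequency response of the RC low-pass filter: H(jw) = 1 / (1 + j w RC)."""
    return 1 / (1 + 1j * w * RC)


def gain_db(w):
    return 20 * math.log10(abs(H(w)))


break_w = 1 / RC
low = H(break_w / 1000)                                  # far below the break: magnitude ~1, phase ~0
at_break = H(break_w)                                    # at the break: magnitude 1/sqrt(2), phase -pi/4
slope = gain_db(100 * break_w) - gain_db(10 * break_w)   # about -20 dB per decade
high_phase = cmath.phase(H(1000 * break_w))              # approaches -pi/2
```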

But now, let's think about exciting it with a square wave. Square wave is what we've been thinking about.

The square wave has a harmonic structure that falls as 1 over k. So if we plot k equals 1, 3, 5, 7, 9 on log-log axes, falling as 1 over k is a straight line with slope minus 1. That's the idea of a log, right? The reciprocal dependence on k turns into a linear decrease in height.

So we can represent the signal, the square wave, by this amplitude spectrum. How big are the components at all of the different frequencies? Well, there's only a discrete number of them. There's the k equals 1 one, the k equals 3 one, the k equals 5 one, et cetera. Each one represented by a straight line. And they all have phase of minus pi over 2 because of the j.

Now, suppose you choose the base frequency of the square wave, omega 0 equals 2 pi over capital T, to be small compared to the cutoff frequency of the low-pass filter. What would be the effect of this filter on these amplitudes?

Well, at low frequencies the magnitude approaches 1: if you go to arbitrarily low frequencies, the magnitude is arbitrarily close to 1 and the phase is arbitrarily close to 0. So if the input is represented by the red lines, what's the output represented by? The same red lines, right? Each red line is multiplied by 1 at angle 0. So the output has the same shape as the input.

If, however, I make the period shorter, so that omega 0 is higher, then some of the higher harmonics fall into the region of sloping magnitude, and some of their phases fall into the region where the phase approaches minus pi over 2.

The way to think about it is that the fundamental still passes through the region with unity gain and a phase of 0. The basic shape is determined by the fundamental, so the basic shape comes through. But now the high frequencies are being attenuated and their phase relationships are being altered. That's why the shape is different.

If you go to still higher frequencies, more of the components are filtered. Each component gets sent through the frequency response. And its amplitude and phase are modified appropriately.

And if you go to extremely high frequencies, then all of the magnitudes are multiplied by the sloping-down part of the response. We turn the 1-over-k fall-off into a 1-over-k-squared fall-off.

Where have we seen that before? That was the decomposition for the triangle wave. So the answer comes out looking like a triangle wave.
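The same reasoning fits in a few lines: assuming RC = 1 s and the square-wave coefficients a_k = 1/(j pi k) for odd k, multiply each coefficient by H(j k omega_0). For a slow square wave the coefficients pass through almost unchanged; for a fast one, k^2 times each output coefficient is nearly constant, which is the 1/k^2 fall-off of a triangle wave.

```python
import math

RC = 1.0  # assumed time constant, so the filter's break frequency is 1 rad/s


def square_coeff(k):
    """Square-wave coefficients: 1/(j pi k) for odd k, 0 otherwise."""
    return 1 / (1j * math.pi * k) if k % 2 else 0


def filtered_coeffs(w0, ks):
    """Each harmonic is an eigenfunction: output coefficient = a_k * H(j k w0), with H(jw) = 1/(1 + j w RC)."""
    return {k: square_coeff(k) / (1 + 1j * k * w0 * RC) for k in ks}


odd_ks = [1, 3, 5, 7, 9]
slow = filtered_coeffs(0.001, odd_ks)    # w0 far below 1/RC: output ~ input
fast = filtered_coeffs(1000.0, odd_ks)   # w0 far above 1/RC: output falls off as 1/k^2
scaled = [k ** 2 * fast[k] for k in odd_ks]  # roughly constant if the fall-off is 1/k^2
```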

The point being that in addition to thinking about how the system works in terms of feeding frequencies through it, now we think about how signals work by breaking them up into frequency parts. And the whole system then is reduced to, how does the system modify that component? So that gives rise to the idea of thinking about the system as a filter. LTI systems do not generate new frequencies. All they do is modify the amplitude and phase of existing frequencies.

If you enumerate all of the existing frequencies, you can just go through them one by one and figure out how they come out. And that's an equivalent way of thinking about an LTI system. And that's the kind of a focus that we'll use in the remaining part of the course. OK,